Webapp keygen

Installation and Management Guide

Installation

Overall Setup

CryoSPARC is a backend and frontend software system that provides data processing and image analysis capabilities for single-particle cryo-EM, along with a browser-based user interface and command line tools.

The cryoSPARC system is designed to run on several system architectures and is based on a master-worker pattern. The master processes run together on one node (the master node), and worker processes can be spawned on any available worker node (including the master node, if it is also registered as a worker). The master node can also spawn or submit jobs to a cluster scheduler system (SLURM, etc.). This allows the program to run on a single workstation, a collection of unmanaged workstations/nodes, a cluster of managed nodes (running a cluster scheduler like SLURM/PBS/Torque/etc.), or a heterogeneous mix of the above.

The major requirement for installation is that all nodes (including the master) be able to access the same shared file system(s) at the same absolute path. These file systems (typically cluster file systems or NFS mounts) will be used for loading input data into jobs running on various nodes, as well as saving output data from jobs.

The cryoSPARC system is specifically designed not to require root access to install or use. The reason for this is to avoid security vulnerabilities that can occur when a network application (web interface, database, etc) is hosted as the root user. For this reason, the cryoSPARC system must be installed and run as a regular unix user (from now on referred to as ), and all input and output file locations must be readable and writable as this user. In particular, this means that project input and output directories that are stored within a regular user's home directory need to be accessible by , or else (more commonly) another location on a shared file system must be used for cryosparc project directories.

If you are installing the cryoSPARC system for use by a number of users (for example within a lab), there are two ways to do so, described below. Each is also described in more detail in the rest of this document.

1) Create a new regular user () and install and run cryoSPARC as this user. Create a cryoSPARC project directory (on a shared file system) where project data will be stored, and create sub-directories for each lab member. If extra security is necessary, use UNIX group privileges to make each sub-directory readable/writable only by and the appropriate lab member's UNIX account. Within the cryoSPARC command-line interface, create a cryoSPARC user account for each lab member, and have each lab member create their projects within their respective project directories. This method relies on the cryoSPARC webapp for security to limit each user to seeing only their own projects. This is not guaranteed security: malicious users who try hard enough will be able to modify the system to see the projects and results of other users.

2) If each user must be guaranteed complete isolation and security of their projects, each user must install cryoSPARC independently within their own home directory. Projects can be kept private within user home directories as well, using UNIX permissions. Multiple single-user cryoSPARC master processes can be run on the same master node, and they can all submit jobs to the same cluster scheduler system. This method relies on the UNIX system for security and is more tedious to manage, but provides stronger access restrictions. Each user will need their own license key in this case.

CryoSPARC worker processes run on worker nodes to actually carry out computational workloads, including all GPU-based jobs. Some job types (interactive jobs, visualization jobs, etc) also run directly on the master node without requiring a separate process to be spawned.

Worker nodes can be installed with the following options:

- GPU(s) available: at least one worker must have GPUs available to be able to run the complete set of cryoSPARC jobs, but non-GPU workers can also be connected to run CPU-only jobs. Different workers can have different CUDA versions, but installation is simplest if all have the same version.

- SSD scratch space available: SSD space is optional on a per-worker node basis, but highly recommended for worker nodes that will be running refinements and reconstructions using particle images. Nodes reserved for pre-processing (motion correction, particle picking, CTF estimation, etc) do not need to have an SSD.

Prerequisites

The cryoSPARC system is pictured below:

The major requirements for networking and infrastructure are:

- Shared file system. All nodes must have access to the same shared file system(s) where input and project directories are stored.

- A reliable (i.e. redundant) file system, not necessarily shared, available on the master node for storage of the cryoSPARC database.

- HTTP access from users' browsers to the master node. The master node will run a web application at port 39000 (configurable) that all user machines (laptops, etc.) must be able to access via HTTP. For remote access, users can use a VPN into your local network, SSH port tunnelling, or X forwarding (last resort, not recommended).

- SSH access between the master node and each standalone worker node. The account should be set up to be able to SSH without password (using a key-pair) into all non-cluster worker nodes.

- SSH access between the master node and a cluster head node. If the master node itself is allowed to directly run cluster scheduler commands (qsub, etc) then this is not necessary. Otherwise, a cluster head node that can launch jobs must be SSH (without password) accessible for from the master node.

- TCP access between every worker node (standalone or cluster) and the master node on ports 39000-39010 (configurable) for command and database connections.

- Internet access (via HTTP) from the master node. Note: worker and cluster nodes do not need internet access.

- Every node should have a consistently resolvable hostname on the network (the long name is preferred, e.g. rather than just )
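To check the worker-to-master TCP requirement from a worker node before installing, a small probe can be useful. The sketch below is an illustration, not a cryoSPARC tool: it uses Python's standard `socket` module, and the helper names `probe_port` and `probe_range` are hypothetical. It assumes the default range of base port 39000 plus the ten following ports. Not every port in the range necessarily has a service listening, so a "refused" result only shows that the host answered; a "timeout" is the outcome more typical of a firewall silently dropping packets.

```python
import socket

def probe_port(host: str, port: int, timeout: float = 2.0) -> str:
    """Return 'open', 'refused', 'timeout', or 'error' for one TCP connect attempt."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return "open"
    except ConnectionRefusedError:
        # The host answered, but nothing is listening on this port.
        return "refused"
    except socket.timeout:
        # No answer at all: often a sign that a firewall is dropping packets.
        return "timeout"
    except OSError:
        # DNS failure, unreachable network, and similar problems.
        return "error"

def probe_range(host: str, base_port: int = 39000, count: int = 11,
                timeout: float = 2.0) -> dict:
    """Probe base_port .. base_port + count - 1 (39000-39010 by default)."""
    return {port: probe_port(host, port, timeout)
            for port in range(base_port, base_port + count)}
```

For example, `probe_range("master.example.edu")` run on a worker returns a status for each port; `master.example.edu` stands in for your master node's long hostname.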

All nodes:

- Modern Linux OS

- CentOS 6+, Ubuntu 12.04+, etc.

- Essentially, GLIBC >= 2.12 and gcc >= 4.4 are required. Note: On a fresh Linux installation, you may need to run

- bash as the default shell for

The master node:

- Must have 8GB+ RAM, 2+ CPUs

- GPU/SSD not necessary

- Can double as a worker if it also has GPUs/SSD

- Note: on clusters, the master node can be a regular cluster node (or even a login node) if this makes networking requirements easier. The master processes must run continuously, though, so if you use a regular cluster node, it probably needs to be requested from your scheduler in interactive mode or as an indefinitely running job. If the master process is started on one cluster node, then stopped, then later started on a different cluster node, everything should run fine. However, you should not attempt to start the same master process (same port number/database/license ID) on multiple nodes simultaneously, as this might cause database corruption.
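The GLIBC, CPU and RAM minimums listed above can be checked with Python's standard library. This is a minimal sketch under stated assumptions: the helper names are hypothetical, "8GB" is interpreted as 8 × 10^9 bytes so that a nominal 8 GB machine is less likely to be rejected for memory the kernel reserves, and `os.sysconf` is only available on Unix-like systems. Versions are compared as integer tuples because plain string comparison gets them wrong (`"2.5" > "2.12"` is true for strings). The gcc version is not covered here; `gcc --version` reports it.

```python
import os
import platform

def version_tuple(text: str) -> tuple:
    """'2.17' -> (2, 17); non-numeric parts are ignored."""
    return tuple(int(part) for part in text.split(".") if part.isdigit())

def at_least(found: str, required: str) -> bool:
    """Numeric version comparison, e.g. at_least('2.17', '2.12')."""
    return version_tuple(found) >= version_tuple(required)

def meets_master_minimums(cpus: int, ram_bytes: int,
                          min_cpus: int = 2, min_ram_gb: int = 8) -> bool:
    """Master node minimums from this guide: 2+ CPUs and 8GB+ RAM (decimal GB)."""
    return cpus >= min_cpus and ram_bytes >= min_ram_gb * 10**9

def local_facts() -> dict:
    """Collect the values to check on this machine (Unix-like systems only)."""
    libc, libc_version = platform.libc_ver()  # e.g. ('glibc', '2.17'); may be empty strings
    ram = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
    return {"libc": libc, "libc_version": libc_version,
            "cpus": os.cpu_count() or 0, "ram_bytes": ram}

if __name__ == "__main__":
    facts = local_facts()
    print(facts)
    print("GLIBC >= 2.12:", facts["libc"] == "glibc" and at_least(facts["libc_version"], "2.12"))
    print("master minimums:", meets_master_minimums(facts["cpus"], facts["ram_bytes"]))
```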

Standalone worker nodes:

- logged in to the master node must be able to SSH to the worker node without a password. Note: it is not strictly necessary that the user account name/UID on the worker node be identical to the master node, if the shared-file-system requirement is met, but installation becomes more complex in that case.

- at least one node must have a GPU and preferably an SSD

- no standalone workers are required if running only on a cluster

Cluster worker nodes:

- SSH access from the master node is not required

- Must be able to directly connect via TCP (HTTP and MongoDB) to the master node, on ports 39000-39010 (configurable)

- Must have CUDA installed (a version from 9.2 to 10.2 inclusive is required; CUDA 11 is not supported)
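One way to confirm the CUDA requirement on a cluster node is to parse the release number that `nvcc --version` prints (its output contains a line such as `Cuda compilation tools, release 10.2, V10.2.89`). The following is a sketch, assuming `nvcc` is on the `PATH` of the node you test from; the helper names are hypothetical, and the 9.2 to 10.2 bounds are taken from the requirement above.

```python
import re
import subprocess

SUPPORTED_MIN = (9, 2)
SUPPORTED_MAX = (10, 2)

def parse_nvcc_release(nvcc_output: str):
    """Extract (major, minor) from `nvcc --version` output, or None if absent."""
    match = re.search(r"release (\d+)\.(\d+)", nvcc_output)
    return (int(match.group(1)), int(match.group(2))) if match else None

def cuda_supported(release) -> bool:
    """True if the release lies within the supported range, inclusive."""
    return release is not None and SUPPORTED_MIN <= release <= SUPPORTED_MAX

def installed_cuda_release(nvcc: str = "nvcc"):
    """Run nvcc and parse its version; raises FileNotFoundError if nvcc is missing."""
    result = subprocess.run([nvcc, "--version"], capture_output=True, text=True, check=True)
    return parse_nvcc_release(result.stdout)
```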

Optimal Setup Suggestions

Disks & compression

- Fast disks are a necessity for processing cryo-EM data efficiently. Fast sequential read/write throughput is needed during preprocessing stages, where the volume of data is very large (10s of TB) while the amount of computation is relatively low (sequential processing for motion correction, CTF estimation, particle picking, etc.).

- Typically users use spinning disk arrays (RAID) to store large raw data files, and often cluster file systems are used for larger systems. As a rule of thumb, to saturate a 4-GPU machine during preprocessing, sustained sequential read of 1000MB/s is required.

- Compression can greatly reduce the amount of data stored in movie files, and also greatly speeds up preprocessing, because decompression is actually faster than reading uncompressed data straight from disk. Typically, counting-mode movie files are stored in LZW-compressed TIFF format without gain correction, so the gain reference file is stored separately and must be applied on-the-fly during processing (which is supported by cryoSPARC). Compressing gain-corrected movies can often result in much worse compression ratios than compressing pre-gain-corrected (integer count) data.

- cryoSPARC supports LZW-compressed TIFF and BZ2-compressed MRC formats natively. In either case, the gain reference must be supplied as an MRC file. Both formats are decompressed on-the-fly using multicore decompression streams.
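The point that gain-corrected data compresses worse can be illustrated with synthetic data. The sketch below is not cryoSPARC code and uses made-up numbers: it draws Poisson-distributed integer counts (an assumed mean of 1 count per pixel per frame) and a random gain reference between 0.5 and 1.5, then compares how well Python's standard `bz2` module compresses the raw 8-bit counts versus the gain-corrected 32-bit floats. Real ratios depend on dose, detector and file layout.

```python
import array
import bz2
import math
import random

def poisson(lam: float, rng: random.Random) -> int:
    """Knuth's method; adequate for the small per-pixel means of counting-mode frames."""
    threshold = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1

def compression_ratio(data: bytes) -> float:
    """Uncompressed size divided by bz2-compressed size (higher is better)."""
    return len(data) / len(bz2.compress(data))

rng = random.Random(0)
n_pixels = 256 * 256
mean_counts = 1.0  # assumed electrons per pixel per frame
counts = [poisson(mean_counts, rng) for _ in range(n_pixels)]
gain = [rng.uniform(0.5, 1.5) for _ in range(n_pixels)]  # synthetic gain reference

# Raw integer counts fit in 1 byte per pixel; gain-corrected values need float32.
raw_bytes = array.array("B", counts).tobytes()
corrected_bytes = array.array("f", [c * g for c, g in zip(counts, gain)]).tobytes()

raw_ratio = compression_ratio(raw_bytes)
corrected_ratio = compression_ratio(corrected_bytes)
print(f"integer counts:       {raw_ratio:.1f}x")
print(f"gain-corrected float: {corrected_ratio:.1f}x")
```

Multiplying each count by a per-pixel float spreads the values across many distinct bit patterns, which is why the corrected stream compresses worse even though it carries no extra information.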

SSDs

- For classification, refinement, and reconstruction jobs that deal with particles, having local SSDs on worker nodes can significantly speed up computation, as many algorithms rely on random-access patterns and multiple passes through the data, rather than reading the data sequentially once.

- SSDs of 1TB+ are recommended to be able to store the largest particle stacks.

- SSD caching can be turned off if desired, for the job types that use it.

- cryoSPARC manages the SSD cache on each worker node transparently - files are cached, re-used across jobs in the same project, and deleted if more space is needed.

- For more information on using SSDs in cryoSPARC, see SSD Caching in cryoSPARC
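The cache behaviour described above (files cached per project, re-used across jobs, deleted when space is needed) can be modelled in a few lines. This is a toy sketch only: the text does not say how cryoSPARC chooses which files to delete, so least-recently-used eviction is an assumption, and the class name `ParticleCache` is hypothetical.

```python
from collections import OrderedDict

class ParticleCache:
    """Toy model of a per-worker SSD cache with a byte quota (LRU eviction assumed)."""

    def __init__(self, capacity_bytes: int):
        self.capacity = capacity_bytes
        self.used = 0
        self._files = OrderedDict()  # (project, path) -> size in bytes, oldest first

    def request(self, project: str, path: str, size: int) -> bool:
        """Return True on a cache hit, False if the file had to be copied in."""
        key = (project, path)
        if key in self._files:
            self._files.move_to_end(key)  # mark as most recently used
            return True
        if size > self.capacity:
            raise ValueError("file is larger than the whole cache")
        # Delete the least recently used files until the new file fits.
        while self.used + size > self.capacity:
            _, evicted_size = self._files.popitem(last=False)
            self.used -= evicted_size
        self._files[key] = size
        self.used += size
        return False
```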

GPUs, CPUs, RAM

- Only NVIDIA GPUs are supported; compute capability 3.5+ is required.

- The GPU RAM in each GPU limits the maximum box size allowed in several processing types

- Typically, a 12GB GPU can handle a box size up to 700^3

- Older GPUs can often perform almost equally as well as the newest, fastest GPUs because most computations in cryoSPARC are not bottlenecked by GPU compute speed, but rather by memory bandwidth and disk IO speed. Many of our benchmarks are done on NVIDIA Tesla K40s which are now (2018) almost 5 years old.

- Multiple CPUs are needed per GPU in each worker system, at least 2 CPUs per GPU, though more is better.

- System RAM of 32GB per GPU in a system is recommended. Faster RAM (DDR4) can often speed up processing.
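The GPU memory and sizing guidelines above come down to simple arithmetic. A single float32 volume of box size N occupies 4 * N^3 bytes, so one 700^3 volume alone needs about 1.37 GB; how many volumes and working buffers a given job keeps on the GPU at once is not stated here, so this is a per-volume lower bound rather than a model of any job. The helper names are hypothetical.

```python
def volume_bytes(box: int, bytes_per_voxel: int = 4) -> int:
    """Memory for one box^3 volume stored as float32 (4 bytes per voxel)."""
    return box ** 3 * bytes_per_voxel

def worker_recommendation(n_gpus: int) -> dict:
    """Guidelines from this guide: at least 2 CPUs and 32GB system RAM per GPU."""
    return {"min_cpus": 2 * n_gpus, "recommended_ram_gb": 32 * n_gpus}

print(volume_bytes(700) / 1e9)   # about 1.37 GB for a single 700^3 float32 volume
print(worker_recommendation(4))  # {'min_cpus': 8, 'recommended_ram_gb': 128}
```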

Quick Installation

Single workstation

The following commands will install cryoSPARC onto a single machine serving as both the master and worker node by specifying the option. This installation will use all default settings and enable all GPUs on the workstation. For a more complex or custom installation, see the guides below.

Preparation

Prepare the following items before you start. The name of each item is used as a placeholder in the below commands, and the values given after the equals sign are examples.

- The root directory where cryoSPARC code and dependencies will be installed.

- the license ID issued to you

- only a single cryoSPARC master instance can be running per license key.

- the full path to the worker directory, which was downloaded and extracted

- to get the full path, into the cryosparc2_worker directory and run the command:

- path to the CUDA installation directory on the worker node

- note: this path should not be the directory, but the directory that contains both and subdirectories

- path on the worker node to a writable directory residing on the local SSD

- this is optional, and if omitted, also add the option to indicate that the worker node does not have an SSD

- login email address for first cryoSPARC webapp account

- this will become an admin account in the user interface

- temporary password that will be created for the account

- full name of the initial admin account to be created

- ensure the name is quoted

- [Optional]

- The base port number for this cryoSPARC instance. Do not install cryosparc master on the same machine multiple times with the same port number - this can cause database errors. 39000 is the default.

- specify port number by adding to the command
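Because reusing a port range on the same machine can cause database errors, it can help to confirm before installing that nothing on the master machine is already bound in the range. This sketch, using only Python's `socket` module, tries to bind each port from the base port through the ten following ports (assumed to match the default 39000 to 39010 range); the function name is hypothetical. A stopped cryoSPARC instance holds no ports, so a clean result does not prove that no other installation is configured with the same base port.

```python
import socket

def ports_in_use(base_port: int = 39000, count: int = 11, host: str = "0.0.0.0") -> list:
    """Return the ports in [base_port, base_port + count) that cannot be bound here."""
    busy = []
    for port in range(base_port, base_port + count):
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
            try:
                s.bind((host, port))
            except OSError:
                # Typically EADDRINUSE: another process already holds this port.
                busy.append(port)
    return busy
```

An empty list from `ports_in_use()` means every port in the default range could be bound at the moment of the check.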

SSH into the workstation as (the UNIX user that will run all cryosparc processes, could be your personal account). The following assumes bash is your shell.

Example

After completing the above, navigate your browser to to access the cryoSPARC user interface.

Master

Prepare the following items before you start. The name of each item is used as a placeholder in the below commands, and the values given after the equals sign are examples.

- The long-form hostname of the machine that will be acting as the master node.

- If the machine is consistently identifiable on your network with just the short hostname (in this case ) then that is sufficient.

- You can usually get the long name using the command: , and this is the that is used if the option is left out below.

- a regular (non-root) UNIX user account selected to run the cryosparc processes.

- See above for how to decide which user account this should be, depending on your scenario

- The directory where cryoSPARC code and dependencies will be installed.

- A directory that resides somewhere accessible to the master node, on a reliable (possibly shared) file system

- The cryoSPARC database will be stored here.

- The license ID issued to you

- Only a single cryoSPARC master instance can be running per license key.

- [Optional]

- The base port number for this cryoSPARC instance. Do not install cryosparc master on the same machine multiple times with the same port number - this can cause database errors. 39000 is the default and will be used if the option is left out below.

- login email address for first cryoSPARC webapp account

- This will become an admin account in the user interface

- password that will be created for the account
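Python's standard library offers a quick way to see what long name a machine reports for itself and whether that name resolves. This is a minimal sketch: `socket.getfqdn()` may not return the same value as the command the installer uses by default, so compare it against the hostname you intend to pass. Running the same check with the master's name on each worker confirms that every node resolves it consistently. The function name `hostname_report` is hypothetical.

```python
import socket

def hostname_report(name=None) -> dict:
    """Report the short and long names of this machine, or resolve a given name."""
    short = socket.gethostname()
    fqdn = name or socket.getfqdn()
    try:
        address = socket.gethostbyname(fqdn)
    except OSError:
        address = None  # the name does not resolve from this machine
    return {"short": short, "fqdn": fqdn, "address": address}

print(hostname_report())
```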

SSH in to the machine as , and then run the following commands:

More arguments to :

- : this instructs the installer and the cryoSPARC master node to ignore SSL certificate errors when connecting to HTTPS endpoints. This is useful if you are behind an enterprise network using SSL injection.

- : force-allow installation as root

- : do not ask for any user input confirmations

Note: As mentioned in Prerequisites, the master node uses SSH to communicate with worker nodes. You may use an ssh config file on the master node to specify non-default options (e.g. a port) that the master should use when connecting to the worker node.

Sample :

Standalone worker

Installation of the cryoSPARC worker module can be done once and used by multiple worker nodes, if the installation is done in a shared location. This is helpful when dealing with multiple standalone workers.

The requirement for multiple standalone workers to share the same copy of the cryoSPARC worker installation is that they all have the same CUDA version and CUDA library location (usually ). GPUs within each worker can be enabled/disabled independently, to limit the cryoSPARC scheduler to using only the available GPUs. Each worker node can also be configured to indicate whether or not it has a local SSD that can be used to cache particle images.

Prepare the following items before you start:

- Start the cryosparc master process on the master node if not already started

- (as above)

- The long-form hostname of the machine that is the master node.

- The port number that was used to install the master process, 39000 by default.

- The long-form hostname of the machine that will be running jobs as the worker.

- Ensure that SSH keys are set up for the account to SSH between the master node and the worker node without a password.

- Generate an RSA key pair (if not already done) (use no passphrase and the default key file location)

- On most systems you can log into the master node and do

- Path to the CUDA installation directory on the worker node

- Note: this path should not be the directory, but the directory that contains both and subdirectories

- This is optional, and if omitted, also add the option to indicate that the worker node does not have any GPUs [As of v2.0.20, no-GPU installation is not yet supported. It will be in a future version.]

- Path on the worker node to a writable directory residing on the local SSD

- This is optional, and if omitted, also add the option to indicate that the worker node does not have an SSD

SSH in to the worker node and execute the following commands to install the cryosparc worker binaries:

Follow the below instructions on each worker node that will use this worker installation.

This will connect the worker node and register it with the master node, allowing it to be used for running jobs. By default, all GPUs will be enabled, the SSD cache will be enabled with no quota/limit, and the new worker node will be added to the default scheduler lane.

For advanced configuration:

This will list the available GPUs on the worker node, and their corresponding numbers. Use this list to decide which GPUs you wish to enable using the flag below, or leave this flag out to enable all GPUs.

Use advanced options with the connect command, or use the flag to update an existing configuration:

Cluster

For a cluster installation, installation of the master node is the same as above. Installation of the worker is done only once, and the same worker installation is used by any cluster nodes that run jobs. Thus, all cluster nodes must have the same CUDA version, CUDA path and SSD path (if any). Once installed, the cluster must be registered with the master process, including providing template job submission commands and scripts that the master process will use to submit jobs to the cluster scheduler.

The cluster worker installation needs to be run on a node that either is a cluster worker, or has the same configuration as cluster workers, to ensure that CUDA compilation will be successful at install time.

To install on a cluster, SSH into one of the cluster worker nodes and execute the following:

To register the cluster, you will need to provide cryoSPARC with template strings used to construct cluster commands (like , , etc., or their equivalents for your system), as well as a template string to construct appropriate cluster submission scripts for your system. The template engine is used to generate cluster submission/monitoring commands as well as submission scripts for each job.

The following fields are required to be defined as template strings in the configuration of a cluster. Examples for PBS are given here, but you can use any command required for your particular cluster scheduler:

Along with the above commands, a complete cluster configuration requires a template cluster submission script. The script should be able to send jobs into your cluster scheduler queue, marking them with the appropriate hardware requirements. The cryoSPARC internal scheduler will take care of submitting jobs as their inputs become ready. The following variables are available to be used within a cluster submission script template. Examples of templates, for use as a starting point, can be generated with the commands explained below.
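The idea of a submission-script template can be sketched with Python's standard `string.Template` as a stand-in for the template engine. Everything in this example is an assumption for illustration: the placeholder names (`$job_name`, `$num_gpus`, `$run_cmd`, and so on) are hypothetical and are not the variables cryoSPARC provides, and the `#PBS` resource lines are ordinary Torque/PBS directives that you would adapt to your scheduler.

```python
from string import Template

# Hypothetical PBS-style template; the $-placeholders are illustrative names only.
PBS_TEMPLATE = Template("""#!/bin/bash
#PBS -N $job_name
#PBS -l nodes=1:ppn=$num_cpus:gpus=$num_gpus
#PBS -l mem=${ram_gb}gb
#PBS -o $job_dir/job.out
#PBS -e $job_dir/job.err

$run_cmd
""")

def render_submission_script(**values) -> str:
    """Fill the template; substitute() raises KeyError if a placeholder is missing."""
    return PBS_TEMPLATE.substitute(**values)

script = render_submission_script(
    job_name="P3_J42", num_cpus=4, num_gpus=1, ram_gb=24,
    job_dir="/shared/projects/P3/J42", run_cmd="echo run-the-job-here",
)
print(script)
```

Using `substitute()` rather than `safe_substitute()` makes a misspelled or missing variable fail loudly instead of leaving a literal `$name` in the submitted script.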

Note: The cryoSPARC scheduler does not assume control over GPU allocation when spawning jobs on a cluster. The number of GPUs required is provided as a template variable, but either your submission script or your cluster scheduler itself is responsible for assigning GPU device indices to each job spawned. The actual cryoSPARC worker processes that use one or more GPUs on a cluster will simply begin using device 0, then 1, then 2, etc. Therefore, the simplest way to get GPUs correctly allocated is to ensure that your cluster scheduler or submission script sets the environment variable, so that device 0 is always the first GPU that the particular spawned job should use. The example script for clusters (generated as below) shows how to check which GPUs are available at runtime and automatically select the next available device.
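The allocation strategy described above can be sketched as two small steps: choose free GPU indices, then expose only those to the job. This sketch assumes the scheduler or script uses CUDA's standard `CUDA_VISIBLE_DEVICES` variable, which makes the listed GPUs appear to the process as devices 0, 1, 2, etc. How the set of busy GPUs is discovered (for example from `nvidia-smi`) is left out, and the helper names are hypothetical.

```python
import os

def pick_free_gpus(total_gpus: int, busy: set, wanted: int) -> list:
    """Return the lowest-numbered `wanted` GPU indices that are not in `busy`."""
    free = [i for i in range(total_gpus) if i not in busy]
    if len(free) < wanted:
        raise RuntimeError(f"need {wanted} GPUs, only {len(free)} free")
    return free[:wanted]

def visible_devices_env(gpu_ids: list) -> dict:
    """Environment for a child process so that its device 0 is the first assigned GPU."""
    env = dict(os.environ)
    env["CUDA_VISIBLE_DEVICES"] = ",".join(str(i) for i in gpu_ids)
    return env
```

For example, on a 4-GPU node where GPUs 0 and 2 are busy, a 2-GPU job gets `[1, 3]`, and passing the resulting environment to `subprocess.run(..., env=...)` makes those two cards appear to the job as devices 0 and 1.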

To actually create or set a configuration for a cluster in cryoSPARC, use the following commands. The , , and commands read two files from the current working directory: and

Accessing the cryoSPARC UI Remotely via SSH-Tunneling

By default, cryoSPARC user interface pages are served by a web server running on the same machine where cryoSPARC is installed, at port 39000. This web server is responsible for displaying datasets, experiments, streaming real time results, user accounts, updating, etc.

Often, the compute server running cryoSPARC may be behind a firewall with no direct access from the outside world to port 39000, but still accessible via SSH. When you want to access cryoSPARC from home or elsewhere to be able to run jobs and view results, it can be convenient to connect to the web server via an SSH tunnel. This post assumes you are trying to access cryoSPARC from a Linux/UNIX/MacOS local system.

When you can connect to the compute node with a single SSH command

This scenario assumes your network setup looks like this:

CryoSPARC is running on the remote host, and the firewall only allows SSH connections (port 22). Since you can directly ssh into the compute node, use the following steps:

Set up SSH keys for password-less access (only if you currently need to enter your password each time you ssh into the compute node).

If you do not already have SSH keys generated on your local machine, use to do so. Open a terminal prompt, and enter:

Note: this will create an RSA key-pair with no passphrase in the default location.

Copy the RSA public key to the remote compute node for password-less login:

Note: and are your username and the hostname that you use to SSH into your compute node. This step will ask for your password.

Start an SSH tunnel to expose port 39000 from your compute node to your local machine.

Note: the flag tells to run in the background, so you can close the terminal window after running this command, and the tunnel will stay open.
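The three commands for this scenario can be assembled as argument lists ready for `subprocess.run`. This is a sketch: the user and host names are placeholders, the key path is the usual default, and `ssh-keygen` will prompt before overwriting a key that already exists. In the tunnel command, `-N` runs no remote command, `-f` sends ssh to the background, and `-L` forwards local port 39000 to port 39000 on the compute node.

```python
import os
import shlex

def key_setup_commands(user: str, host: str, key_path: str = "~/.ssh/id_rsa") -> list:
    """ssh-keygen and ssh-copy-id invocations for password-less login."""
    key = os.path.expanduser(key_path)
    return [
        # RSA key pair with an empty passphrase (-N "") written to `key` (-f).
        ["ssh-keygen", "-t", "rsa", "-N", "", "-f", key],
        # Install the public key on the remote host; asks for your password once.
        ["ssh-copy-id", "-i", key + ".pub", f"{user}@{host}"],
    ]

def tunnel_command(user: str, host: str, port: int = 39000) -> list:
    """Forward localhost:port on this machine to localhost:port on the compute node."""
    return ["ssh", "-N", "-f", "-L", f"localhost:{port}:localhost:{port}", f"{user}@{host}"]

# Print the commands instead of running them; pass each list to subprocess.run to execute.
for argv in key_setup_commands("alice", "compute.example.edu"):
    print(shlex.join(argv))
print(shlex.join(tunnel_command("alice", "compute.example.edu")))
```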

Now, open your browser (Chrome) and navigate to . You should be presented with the cryoSPARC login page.

When you have to SSH through multiple servers to reach your cryoSPARC compute node

This scenario assumes your network setup looks like this:

CryoSPARC is running on the remote host, behind an ssh server, both of which have firewalls. The firewalls only allow SSH connections (port 22). In this case, you can use multi-hop SSH to create a tunnel to the remote host to expose port 39000:

Set up SSH keys for password-less access from localhost -> ssh server

If you do not already have SSH keys generated on your local machine, use to do so. Open a terminal prompt, and enter

Note: this will create an RSA key-pair with no passphrase in the default location.

Copy the RSA public key to the ssh server for password-less login:

Note: and are your username and the hostname that you use for SSH from the outside world. This step will ask for your password.

Set up SSH keys for password-less access from ssh server -> remote host. Note: this step is not necessary if you can already ssh without a password to the compute node from the ssh server.

- If you do not already have SSH keys generated on your ssh server, use to do so. Open a terminal prompt, and enter

- Once logged into the ssh server, enter: Note: this will create an RSA key-pair with no passphrase in the default location.

- Copy the RSA public key to the remote compute node for password-less login: Note: and are your username and the hostname that you use to SSH into the compute node itself. This step will ask for your password.

Set up a multi-hop connection from your local host to the remote host. To do this, open the file (or create it if it doesn't exist) and add the following lines:

Replace with a short name you will use to refer to the remote compute node. Replace and with the actual user/hostname of the compute node that you would use to connect to it from the ssh server. Replace and with the user/hostname of the ssh server. Save the file.
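A multi-hop entry of this kind can also be generated programmatically. This sketch uses OpenSSH's `ProxyJump` directive (available since OpenSSH 7.3); on older clients, `ProxyCommand ssh -W %h:%p user@jumphost` achieves the same hop. The function name and all host and user names are placeholders.

```python
def multihop_config(alias: str, target_user: str, target_host: str,
                    jump_user: str, jump_host: str) -> str:
    """Render a ~/.ssh/config entry that reaches target_host via jump_host."""
    return "\n".join([
        f"Host {alias}",
        f"    HostName {target_host}",
        f"    User {target_user}",
        f"    ProxyJump {jump_user}@{jump_host}",
        "",
    ])

print(multihop_config("cryosparc", "alice", "compute.internal", "alice", "gateway.example.edu"))
```

With an entry named `cryosparc` saved in `~/.ssh/config`, the tunnel becomes `ssh -N -f -L localhost:39000:localhost:39000 cryosparc`.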

Start an SSH tunnel to expose port 39000 from your compute node to your local machine.

Note: the flag tells to run in the background, so you can close the terminal window after running this command, and the tunnel will stay open.

Now, open your browser (Chrome) and navigate to . You should be presented with the cryoSPARC login page.

Management and Monitoring

The command line utility serves as the command line entry point for all administrative and advanced usage tasks. The command is available in your if you are logged in as the (as mentioned in the installation guide) and the option to modify your was allowed at install time. Otherwise, to use the command, navigate to your cryosparc master installation directory and find the tool at . (Note: is a bash script with various capabilities.)

Some of the general commands available are:

- - prints help message

- - prints out a list of environment variables that should be set in a shell to replicate the exact environment used to run cryosparc processes. The typical way to use this command is , which causes the current shell to execute the output of the command and therefore define all needed variables. This is how to get access to, for example, the distribution packaged with cryosparc.

- - runs the command using the cryosparc cli (more detail below)

- drops the current shell into an interactive cryosparc shell that connects to the master processes and allows you to interactively run cli commands

- - creates a new user account that can be accessed in the graphical web UI.

- - reset the password for the indicated user to the newly provided

- - update a user profile to change the user name or set admin privileges (by default, new users are not admin users, other than the first created user account.)

- - download test data (subset of EMPIAR 10025) to the current working directory.

Status

The cryoSPARC master process(es) are controlled by a supervisor process that ensures that the correct processes are launched and running at the correct times. The status of the cryoSPARC master system is controlled by the command line utility, which also serves as the command line entry point for all administrative and advanced usage tasks.

The commands relating to status are described below:

- - prints out the current status of the cryosparc master system, including the status of all individual processes (database, webapp, command_core, etc). Also prints out configuration environment variables.

- - starts the cryosparc instance if stopped. This will cause the database, command, webapp etc processes to start up. Once these processes are started, they are run in the background, so the current shell can be closed and the web UI will continue to run, as will jobs that are spawned.

- - stops the cryosparc instance if running. This will gracefully kill all the master processes, and will cause any running jobs (potentially on other nodes) to fail.

Logs

The cryosparc master system keeps track of several log streams to aid in debugging if anything is not working. You can quickly see the debugging logs for various master processes with the following command:

- - begins tailing the log for which can be either , , or .

- - begins tailing the job log for job JXX in project PX (this shows the stdout stream from the job, which is different from the log outputs that are displayed in the webapp).

Backup and Restore

You can backup the cryoSPARC database (not including inputs/outputs/raw data files etc.) using the command. The command has two optional parameters:

- : path to the output directory where the database dump will be saved. Defaults to

- : name of the output file. Defaults to

To restore the database from a backup created with the command above, you can use .

Updating

Updates to cryoSPARC are deployed very frequently, approximately every 2 weeks. When a new update is deployed, users will see a new version appear on the changelog in the dashboard of the web UI. Updates can be done using the process described below. Updates are usually seamless, but do require any currently running jobs to be stopped, since the cryosparc instance will be shut down during update.

Checking for updates

This will check for updates with the cryoSPARC deployment servers, and indicate whether an update is available. You can also use to get a full list of available versions (including old versions in case of a downgrade).
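Deciding whether an update is available comes down to comparing version numbers numerically rather than as text (as strings, `"v2.9.0"` sorts after `"v2.15.0"`). This is a sketch that assumes version strings of the form `v2.15.0`; the function names are hypothetical, and the real check is done by talking to the deployment servers as described above. The same parser can back a check that the master and worker report identical versions, since running mismatched versions causes an error.

```python
import re

def parse_version(tag: str) -> tuple:
    """'v2.15.0' -> (2, 15, 0); the leading 'v' is optional."""
    match = re.fullmatch(r"v?(\d+)\.(\d+)\.(\d+)", tag.strip())
    if match is None:
        raise ValueError(f"unrecognized version string: {tag!r}")
    return tuple(int(group) for group in match.groups())

def latest(available: list) -> str:
    """Highest version by numeric comparison."""
    return max(available, key=parse_version)

def update_needed(current: str, available: list) -> bool:
    return parse_version(latest(available)) > parse_version(current)

def versions_match(master: str, worker: str) -> bool:
    return parse_version(master) == parse_version(worker)
```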

Automatic update

will begin the standard automatic update process, by default updating to the latest available version. The cryosparc instance will be shut down, and then new versions of both the master and worker installations will be downloaded. The master installation will be untarred (this can take several minutes on slower disks, as there are many files) and will replace the current version. If dependencies have changed, updating may trigger a re-installation of dependencies (automatically).

Once master update is complete, the new master instance will start up and then each registered standalone worker node will be updated automatically, by transferring (via scp) the downloaded worker installation file to the worker node, and then untarring and updating dependencies. If multiple standalone worker nodes are registered that all share the same worker installation, the update will only be applied once.

Cluster installations do not update automatically, because not all clusters have internet access on worker nodes. Once the automatic update above is complete, navigate to the cryosparc master installation directory, inside which you will find a file named . This file is the latest downloaded update for worker installations. Copy this file (via scp) inside the directory where the cluster worker installation is installed (so that it sits alongside the and folders) and then within that installation directory, run

This will cause the worker installation at that location to look for the above copied file, untar it, and update itself including dependencies.

Attempting to run a mismatched version of cryosparc master and workers will cause an error.

Manual update

You can update to a specific version exactly as described above, but with the command

Use to see the list of available versions.

Forced update

You can force cryoSPARC to install the latest version available as if it were a fresh instance with the commands

on the master node, then

on each worker node

This command bypasses version checks ( will not continue an update if it detects the current instance is already on the latest version) and dependency checks (the dependency manager will not install a dependency if the binaries on the current instance are already up to date), allowing a user to force cryoSPARC to reinstall all files and dependencies. This can help if an update somehow fails halfway.

Note: You cannot specify a version to install when overriding the update manager. Only the latest version of cryoSPARC will be installed.

Advanced

Notes:

- Master and worker installation directories cannot be moved to a different absolute path once installed. To move an installation, dump the database, install cryoSPARC again in the new location, and restore the database.

fastify-jwt-webapp

fastify-jwt-webapp brings the security and simplicity of JSON Web Tokens to your fastify-based web apps. Single- and multi-page "traditional" applications are the target of this plugin, although it does not impose a server-side session to accomplish being "logged-in" from request to request. Rather, a JWT is simply stored in a client-side cookie and retrieved and verified with each request after successful login. This plugin does not assume knowledge of JWTs themselves, but it does assume knowledge of the workflows involved, particularly as they relate to your provider. (This plugin uses a -like workflow.)

To see fastify-jwt-webapp in the wild, check out my website.

Example

index.js

config.js

Cookie

Being "logged-in" is achieved by passing the JWT along with each request, as is typical with APIs (via the header). fastify-jwt-webapp does this by storing the JWT in a cookie (, "token" by default). By default this cookie is , meaning that the browser will only send it back to your app over a secure connection. To change this behavior, set to . DO NOT DO THIS IN PRODUCTION. YOU HAVE BEEN WARNED. For cookie options, see .
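To make the cookie mechanics concrete, here is a small sketch of writing the JWT into a `Set-Cookie` value with the secure-by-default behavior described above, and reading it back from the request's `Cookie` header. Both helpers are hypothetical and are not part of fastify-jwt-webapp; only the default name "token" comes from the plugin's documentation, while `HttpOnly` and `SameSite=Lax` are assumptions chosen for illustration.

```javascript
// Hypothetical helper: build a Set-Cookie header value for the JWT.
function serializeTokenCookie(token, options = {}) {
  const {
    name = "token",   // documented default cookie name
    domain,           // the domain the cookie is sent for
    path = "/",
    secure = true,    // only sent back over secure connections
    httpOnly = true,  // assumption: hide the JWT from page scripts
    sameSite = "Lax", // assumption
    maxAgeSeconds,    // omit for a browser-session cookie
  } = options;

  const parts = [`${name}=${encodeURIComponent(token)}`, `Path=${path}`];
  if (domain) parts.push(`Domain=${domain}`);
  if (typeof maxAgeSeconds === "number") parts.push(`Max-Age=${maxAgeSeconds}`);
  if (sameSite) parts.push(`SameSite=${sameSite}`);
  if (httpOnly) parts.push("HttpOnly");
  if (secure) parts.push("Secure");
  return parts.join("; ");
}

// Hypothetical helper: pull the JWT back out of a Cookie request header.
function readTokenFromCookieHeader(cookieHeader, name = "token") {
  for (const pair of (cookieHeader || "").split(";")) {
    const eq = pair.indexOf("=");
    if (eq === -1) continue;
    if (pair.slice(0, eq).trim() === name) {
      return decodeURIComponent(pair.slice(eq + 1).trim());
    }
  }
  return null;
}
```

Turning `secure` off drops the `Secure` attribute, which is exactly the setting the warning above says to avoid in production.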

Refresh Tokens

This plugin does not handle refresh tokens, but there's no reason you couldn't implement this functionality yourself. #sorrynotsorry

JWKS Caching

Fetching the JWKS is by far the most taxing part of this whole process, often making a request 10x slower. That's just the cost of doing business with JWTs in this context: the JWT needs to be verified on each request, which entails having the public key, and thus fetching the JWKS from on every single request. Fortunately, a JWKS doesn't change particularly frequently, so fastify-jwt-webapp can cache the JWKS for milliseconds. Even a value like (10 seconds) will, in the long term, add up to much less time spent fetching the JWKS and significantly snappier requests (at least until milliseconds after the caching request, at which point the cache will be refreshed for another milliseconds).
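The caching behavior described above (fetch on first need, reuse for a fixed time-to-live, then refresh) can be sketched as a small TTL wrapper around any async JWKS fetcher. This is a hypothetical helper, not fastify-jwt-webapp's code; the name `createTtlCache`, the injectable clock and the choice to forget failed fetches are assumptions made for illustration. A TTL of 0 disables caching, mirroring the "(disabled)" default in the options table.

```javascript
// Hypothetical sketch: cache the result of an async fetcher (e.g. one that
// downloads a JWKS) for `ttlMs` milliseconds, measured from the request
// that triggered the fetch.
function createTtlCache(fetcher, ttlMs, now = Date.now) {
  let cached = null; // Promise from the most recent fetch, or null
  let fetchedAt = 0; // time (ms) at which that fetch was started

  return function get() {
    // Reuse the cached promise while it is younger than the TTL.
    // With ttlMs <= 0 this check never passes, so every call fetches.
    if (cached !== null && now() - fetchedAt < ttlMs) return cached;

    fetchedAt = now();
    const pending = fetcher();
    cached = pending;
    // Assumption: a failed fetch should not be cached; forget it so the
    // next request retries instead of reusing the rejection.
    pending.catch(() => {
      if (cached === pending) cached = null;
    });
    return pending;
  };
}
```

Caching the promise rather than the resolved value means concurrent requests that arrive while a fetch is in flight share that single fetch. In a Node 18+ app the fetcher could be something like `() => fetch(jwksUrl).then((r) => r.json())`, where `jwksUrl` stands in for your provider's JWKS URL.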

Options

| Key | Default | Description |
|---|---|---|
|  | required | This plugin makes use of "templates" that control the parameters that are sent to the IdP. Can be or right now. |
|  | required | Your client ID. |
|  | required | Your client secret. |
|  | required | The URL that your IdP uses for login, , for example. |
|  | required | The URL that your IdP uses for exchanging an for access token(s), in this case a JWT, , for example. |
|  | required | The URL that serves your JWKS, , for example. |
|  | required | fastify-jwt-webapp works by setting a cookie, so you need to specify the domain for which the cookie will be sent. |
|  | required | This is the URL to which an IdP should redirect in order to process the successful authentication, , for example. |
|  |  | fastify-jwt-webapp creates several endpoints in your application; this is one of them. It processes what your IdP sends over after successful authentication. By default the endpoint is , but you can change that with this parameter. This is closely related to the option mentioned above. |
|  |  | This is the second endpoint that fastify-jwt-webapp adds. It redirects to (with some other stuff along the way). It's by default, but you can change it to anything; it's just aesthetic. |
|  |  | Where do you get redirected after successful authentication? , that's where. |
|  |  | An array of endpoint paths to be excluded from the actions of the plugin (unauthenticated routes). |
|  |  | After successful authentication, the fastify request object will be decorated with the payload of the JWT; you can control that decorator here, for example. |
|  |  | is a totally optional function with signature that is called after successful authentication; it has absolutely no effect on the plugin's actual functionality. |
|  | (disabled) | Will cache the JWKS for milliseconds after the first request that needs it. |
|  |  | If set to the plugin will redirect to if a JWT is present but not valid. |


Single-page web chat application for Tinode. The app is built on React. The Tinode javascript SDK has no external dependencies. Overall it's a lot like open source WhatsApp or Telegram web apps.

Although the app is generally usable, keep in mind that this is a work in progress. Some bugs probably exist, and some features are missing. The app was tested in the latest Chrome & Firefox only. An NPM package is available.

Try a possibly newer or older version live at https://web.tinode.co/.

For demo access and other instructions, see here.

Installing and running

This is NOT a standalone app; it is just a frontend, a client. It requires a backend. See installation instructions.

Getting support

Internationalization

The app is fully internationalized using React-Intl. The UI language is selected automatically from the language specified by the browser. A specific language can be forced by adding the hl parameter to the URL when starting the app, e.g. https://web.tinode.co/#?hl=ru.
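The selection order described above (a forced `hl` value in the URL, otherwise the browser's language) can be sketched as follows. This is a hypothetical illustration, not TinodeWeb's actual code: the function name `pickLanguage`, the fallback to the browser language when the forced code is unavailable, the English default and the locale codes in the usage are all assumptions.

```javascript
// Hypothetical sketch: choose a UI language from a "#?hl=xx" URL hash,
// then from the browser's preferred languages, then a fallback.
function pickLanguage(href, browserLanguages, available, fallback = "en") {
  const url = new URL(href);
  const hash = url.hash.replace(/^#/, ""); // e.g. "?hl=ru"
  const q = hash.indexOf("?");
  const forced = q === -1 ? null : new URLSearchParams(hash.slice(q + 1)).get("hl");

  const candidates = forced ? [forced, ...browserLanguages] : [...browserLanguages];
  for (const tag of candidates) {
    const lower = tag.toLowerCase();
    // Try the full tag first ("zh-CN"), then its primary subtag ("zh").
    const exact = available.find((a) => a.toLowerCase() === lower);
    if (exact) return exact;
    const base = available.find((a) => a.toLowerCase() === lower.split("-")[0]);
    if (base) return base;
  }
  return fallback;
}
```

In a browser this could be called as `pickLanguage(window.location.href, navigator.languages, ["en", "de", "ko", "ru", "es", "zh"])`.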

As of the time of this writing the following translations exist:

- English

- Chinese (simplified)

- German

- Korean

- Russian

- Spanish

More translations are welcome. Send a pull request with a json file with translated strings. Take a look at English, Russian, or Simplified Chinese translations for guidance.

Not done yet

- End-to-End encryption.

- Emoji support is weak.

- Mentions, hashtags.

- Replying or forwarding messages.

- Previews not generated for videos, audio, links or docs.

Other

Push notifications

If you want to use the app with your own server and want web push notifications to work, you have to set them up:

- Register at https://firebase.google.com/, set up the project if you have not done so already.

- Follow instructions to create a web application https://support.google.com/firebase/answer/9326094 in your project.

- Follow instructions at https://support.google.com/firebase/answer/7015592 to get a Firebase configuration object ("Firebase SDK snippet").

- Locate in the root folder of your copy of TinodeWeb app. Copy-paste the following keys from the configuration object to : , , , (you may copy all keys).

- Copy (Project Settings -> Cloud Messaging -> Web configuration -> Web Push certificates) to field in .

- Double check that contains the following keys: , , , , . The file may contain other optional keys.

- Copy Google-provided server key to , see details here.

Responsive design

Desktop screenshot

Mobile screenshots

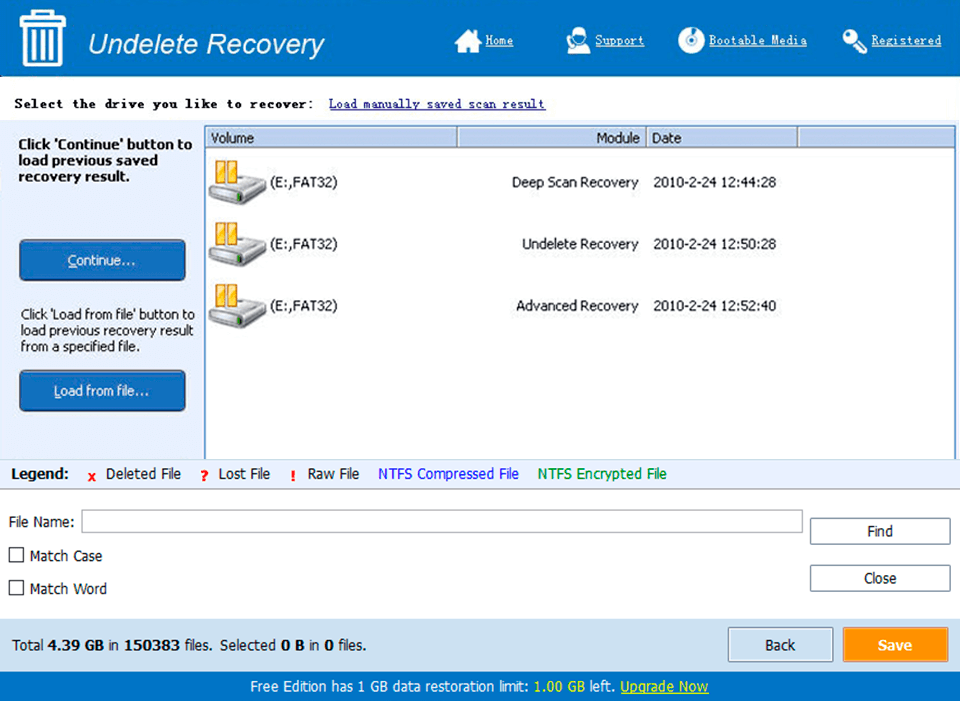
