MyProxy keygen


CMS Box


| Real machine name | cms02.lcg.cscs.ch |
|---|---|

Firewall requirements

| port | open to | reason |
|---|---|---|
| 3128/tcp | WN | access from WNs to FroNtier squid proxy |
| 3401/udp | 128.142.0.0/16, 188.185.0.0/17 | central SNMP monitoring of FroNtier service |
| 1094/tcp | * | access to Xrootd redirector that forwards requests to the native dCache Xrootd door |
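The SNMP row restricts 3401/udp to two CERN networks. When debugging missing monitoring data, it can help to check whether a given source address actually falls inside those ranges. A minimal sketch using Python's standard ipaddress module; the sample addresses are made up for illustration:

```python
import ipaddress

# Source networks allowed to reach the squid SNMP port (3401/udp),
# copied from the firewall table above.
SNMP_ALLOWED = [
    ipaddress.ip_network("128.142.0.0/16"),
    ipaddress.ip_network("188.185.0.0/17"),
]


def snmp_allowed(source_ip: str) -> bool:
    """Return True if source_ip lies inside one of the allowed SNMP networks."""
    addr = ipaddress.ip_address(source_ip)
    return any(addr in net for net in SNMP_ALLOWED)


if __name__ == "__main__":
    # Hypothetical addresses, only to exercise the range boundaries.
    for ip in ("128.142.10.1", "188.185.127.255", "188.185.128.1"):
        print(ip, snmp_allowed(ip))
```

Note that 188.185.0.0/17 only covers 188.185.0.0 to 188.185.127.255, so a host in the upper half of 188.185.x.x would be rejected by the firewall.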

PhEDEx

Service startup, stop and status

We run multiple instances of PhEDEx: one for Production transfers and another for Debugging. Currently, these instances are active:

Note that PhEDEx is run by the user! I (Derek) wrote some custom init scripts which make starting and stopping much simpler than in the original:

- startup scripts at . The init script checks for a valid service certificate before startup! Example:

  /home/phedex/init.d/phedex_Prod start
  /home/phedex/init.d/phedex_Debug start

- Note that startup can take many seconds because reconnecting to the DB is slow. Have some patience and watch the output: the agents should come up one by one.

- Also check the status on the central web site: http://cmsdoc.cern.ch/cms/aprom/phedex/prod/Components::Status?view=global

- Make sure that a valid user proxy is always available to PhEDEx:

  voms-proxy-info -all -file /home/phedex/gridcert/proxy.cert

  If not, a CMS site admin must renew it (see below).
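The proxy check in the last item can be scripted, for example from a monitoring cron job. A minimal sketch, assuming that `voms-proxy-info -all` prints one or more `timeleft : HH:MM:SS` lines (one for the proxy itself, one per VOMS extension); the one-hour warning threshold is an arbitrary choice, not a site rule:

```python
import re
import subprocess

# Matches lines such as "timeleft  : 11:58:47" (assumed voms-proxy-info format).
TIMELEFT_RE = re.compile(r"^timeleft\s*:\s*(\d+):(\d{2}):(\d{2})\s*$", re.MULTILINE)


def min_timeleft_seconds(voms_output: str) -> int:
    """Smallest 'timeleft' value (proxy or VOMS extension) in seconds; 0 if none found."""
    values = [int(h) * 3600 + int(m) * 60 + int(s)
              for h, m, s in TIMELEFT_RE.findall(voms_output)]
    return min(values) if values else 0


def proxy_timeleft(path: str = "/home/phedex/gridcert/proxy.cert") -> int:
    """Run voms-proxy-info on the PhEDEx proxy file and return the seconds left."""
    out = subprocess.run(["voms-proxy-info", "-all", "-file", path],
                         capture_output=True, text=True, check=False).stdout
    return min_timeleft_seconds(out)


if __name__ == "__main__":
    left = proxy_timeleft()
    if left < 3600:  # hypothetical threshold
        print(f"WARNING: PhEDEx proxy expires in {left} s; a CMS site admin must renew it")
```

Taking the minimum matters because the VOMS attributes can expire before the proxy itself, and PhEDEx needs both.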

Configuration

The PhEDEx configuration can be found in :

- : Passwords needed for accessing the central database. We receive these encrypted from cms-phedex-admins@cern.ch. The file contains one section for every PhEDEx instance (Prod, Dev, ...)

- : Configuration definitions for the PhEDEx instances (including load tests)

- : defines the trivial file catalog mappings

- : site-specific scripts called by the download agent

- : mapping of SRM endpoints to FTS servers (q.v. CERN Twiki)

(https://gitlab.cern.ch/SITECONF/T2_CH_CSCS/). There is a symbolic link which points to the active PhEDEx distribution, so that the configuration files need not be changed with every update (though the link needs to be reset). Nowadays the PhEDEx SW is distributed via CVMFS, so local installations are no longer needed.
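To illustrate what "trivial file catalog mappings" means in practice: each `lfn-to-pfn` rule rewrites a logical file name (e.g. /store/...) into a physical one for a given protocol via a regex. The sketch below is a simplified reading of that mechanism, not the PhEDEx implementation; the rule contents are hypothetical (the real CSCS rules live in PhEDEx/storage.xml in the SITECONF repository), and it assumes whole-string matching and ignores rule chaining:

```python
import re
import xml.etree.ElementTree as ET
from typing import Optional

# Hypothetical TFC fragment for illustration only; the real rules will differ.
SAMPLE_TFC = """<storage-mapping>
  <lfn-to-pfn protocol="direct" path-match="/+store/(.*)" result="/pnfs/example.org/cms/store/$1"/>
  <lfn-to-pfn protocol="xrootd" path-match="/+store/(.*)" result="root://xrootd.example.org//store/$1"/>
</storage-mapping>"""


def lfn_to_pfn(tfc_xml: str, lfn: str, protocol: str) -> Optional[str]:
    """Apply the first lfn-to-pfn rule for `protocol` whose path-match fits `lfn`.

    Simplifications (assumptions): whole-string regex matching and no support
    for chaining one rule onto another.
    """
    root = ET.fromstring(tfc_xml)
    for rule in root.iter("lfn-to-pfn"):
        if rule.get("protocol") != protocol:
            continue
        match = re.fullmatch(rule.get("path-match", ""), lfn)
        if match is None:
            continue
        # TFC results refer to captured groups as $1, $2, ...; substitute by hand.
        return re.sub(r"\$(\d+)",
                      lambda ref: match.group(int(ref.group(1))) or "",
                      rule.get("result", ""))
    return None  # no rule for this protocol/LFN


if __name__ == "__main__":
    print(lfn_to_pfn(SAMPLE_TFC, "/store/mc/SAM/test.root", "xrootd"))
```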

DB access

PhEDEx relies on a central Oracle database at CERN. The passwords for accessing it are stored in (q.v. configuration section above).

Agent Auth/Authz by grid certificates and how to renew them

The PhEDEx daemons require a valid grid proxy in to transfer files. This short-lived proxy certificate is renewed every few hours by a cron job running the command (). The renewal is based on a long-lived proxy certificate of the PhEDEx administrator that is stored on a MyProxy server. For this to work, the local PhEDEx administrator needs to deposit their own long-lived proxy on the MyProxy server, which is then used to continually renew the local certificate. The phedex user (cron job) renews the local certificate by

- presenting the old, but still valid, proxy certificate of the PhEDEx admin that is about to be renewed;

- presenting its own proxy certificate, which is produced like a normal user proxy from the user certificate in and . This certificate is actually just a copy of the and belonging to the PhEDEx host.

The MyProxy server only allows registered entities to renew another user's proxy certificate in this way (here, the host certificate is allowed to renew a user proxy). The DN of the host certificate must be entered in the MyProxy server's configuration, i.e. our VO box host DN subject has to be registered with the MyProxy service. Consequently, you need to contact the admins responsible for the myproxy.cern.ch server if the hostname of the CMS VO box changes! Write a mail to Helpdesk@cern.ch.

Checking the service grid-proxy lifetime:

[phedex@cms02 gridcert]$ openssl x509 -subject -dates -noout -in /home/phedex/gridcert/proxy.cert
subject= /DC=ch/DC=cern/OU=Organic Units/OU=Users/CN=jpata/CN=727914/CN=Joosep Pata/CN=750275847/CN=1405542638/CN=1617046039/CN=1231007372
notBefore=Aug 16 12:00:05 2017 GMT
notAfter=Aug 16 12:47:05 2017 GMT

Placing the long-lived certificate on the MyProxy server:

[feichtinger@t3ui01 ~]$ myproxy-init -t 168 -R 'cms02.lcg.cscs.ch' -l cscs_phedex -x -k renewable -s myproxy.cern.ch -c 1500
Your identity: /DC=ch/DC=cern/OU=Organic Units/OU=Users/CN=dfeich/CN=613756/CN=Derek Feichtinger
Enter GRID pass phrase for this identity:
Creating proxy .................................................... Done
Proxy Verify OK
Your proxy is valid until: Tue Oct 24 22:35:03 2017
A proxy valid for 1500 hours (62.5 days) for user cscs_phedex now exists on myproxy.cern.ch.

Testing whether delegation works on the VO box (for this, the local short-lived proxy.cert that you present to the server must also still be valid):

[phedex@cms02 gridcert]$ myproxy-logon -s myproxy.cern.ch -v -m cms -l cscs_phedex -a /home/phedex/gridcert/proxy.cert -o /tmp/testproxy -k renewable
MyProxy v6.1 Jan 2017 PAM SASL KRB5 LDAP VOMS OCSP
Attempting to connect to 188.184.64.90:7512
Successfully connected to myproxy.cern.ch:7512
using trusted certificates directory /etc/grid-security/certificates
Using Proxy file (/tmp/x509up_u24024)
server name: /DC=ch/DC=cern/OU=computers/CN=px502.cern.ch
checking that server name is acceptable...
server name matches "myproxy.cern.ch"
authenticated server name is acceptable
running: voms-proxy-init -valid 11:59 -vomslife 11:59 -voms cms -cert /tmp/testproxy -key /tmp/testproxy -out /tmp/testproxy -bits 2048 -noregen -proxyver=4
Contacting voms2.cern.ch:15002 [/DC=ch/DC=cern/OU=computers/CN=voms2.cern.ch] "cms"...
Remote VOMS server contacted succesfully.
Created proxy in /tmp/testproxy.
Your proxy is valid until Wed Aug 23 22:43:17 CEST 2017
A credential has been received for user cscs_phedex in /tmp/testproxy.
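The openssl check above is easy to turn into a number of minutes or hours left. A minimal sketch that parses the `notBefore=` / `notAfter=` lines printed by `openssl x509 -dates`; the date format string assumes openssl's usual `Aug 16 12:00:05 2017 GMT` layout and an English (C) locale:

```python
import subprocess
from datetime import datetime, timedelta, timezone
from typing import Tuple

# openssl prints dates like "Aug 16 12:00:05 2017 GMT"
OPENSSL_DATE_FMT = "%b %d %H:%M:%S %Y %Z"


def parse_validity(openssl_output: str) -> Tuple[datetime, datetime]:
    """Extract (notBefore, notAfter) as naive UTC datetimes from openssl output."""
    dates = {}
    for line in openssl_output.splitlines():
        key, _, value = line.partition("=")
        if key.strip() in ("notBefore", "notAfter"):
            dates[key.strip()] = datetime.strptime(value.strip(), OPENSSL_DATE_FMT)
    return dates["notBefore"], dates["notAfter"]


def read_validity(path: str = "/home/phedex/gridcert/proxy.cert") -> Tuple[datetime, datetime]:
    """Run openssl on the proxy file and parse its validity window."""
    out = subprocess.run(["openssl", "x509", "-noout", "-dates", "-in", path],
                         capture_output=True, text=True, check=True).stdout
    return parse_validity(out)


def time_left(not_after: datetime) -> timedelta:
    """Remaining validity relative to now (negative if already expired)."""
    now_utc = datetime.now(timezone.utc).replace(tzinfo=None)
    return not_after - now_utc


if __name__ == "__main__":
    not_before, not_after = read_validity()
    print("lifetime:", not_after - not_before)
    print("left:    ", time_left(not_after))
```

Applied to the transcript above, the proxy's total lifetime is 47 minutes. For comparison, the `-c 1500` credential stored on myproxy.cern.ch lasts 1500 / 24 = 62.5 days, matching the myproxy-init output.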
- Get, or ask CSCS to get, the X509 certificate for cms02.lcg.cscs.ch

- Ask px.support@cern.ch to register the X509 DN of the host cms02.lcg.cscs.ch with the myproxy.cern.ch service; otherwise the scripts/cron_proxy.sh logic won't work.

- Ask the CMS Frontier squad to set up SNMP monitoring via GGUS for cms02.lcg.cscs.ch.

- Ask for a GitLab SSH deploy key in order to allow Puppet to clone/pull RO https://gitlab.cern.ch/SITECONF/T2_CH_CSCS/ ; see Fabio's CMS HN thread about the GitLab SSH deploy key mechanism.

- Always keep both https://gitlab.cern.ch/SITECONF/T2_CH_CSCS/ and https://gitlab.cern.ch/SITECONF/T2_CH_CSCS_HPC updated. Clone the repos into a /tmp or /opt directory, make a new branch and try your changes there, because Puppet constantly checks out the master branch!

LCGTier2/CMSMonitoring

Fully Puppet installation

To simplify the CMS VO box installation here at CSCS, we prepared a Puppet recipe that fully installs and configures the CMS VO box.

VM

cms02.lcg.cscs.ch is a VM hosted on the CSCS VMware cluster. For any virtual hardware modification, hard restart, etc., just ping us: we have full access to the admin infrastructure.

Hiera config

We use Hiera to keep the Puppet code more dynamic and to look up some key variables.

Puppet module profile_wlcg_cms_vobox

This module configures:

- Firewall

- CVMFS

- Frontier Squid

- Xrootd

- Phedex

- cmsd

- xrootd

- frontier-squid (managed by the included frontier::squid module)

Run the installation

Currently we still use Foreman to run the initial OS setup:

hammer host create --name "cms02.lcg.cscs.ch" --hostgroup-id 13 --environment "dev1" --puppet-ca-proxy-id 1 --puppet-proxy-id 1 --puppetclass-ids 697 --operatingsystem-id 9 --medium "Scientific Linux" --partition-table-id 7 --build yes --mac "00:10:3e:66:00:63" --ip=10.10.66.63 --domain-id 4

If you want to reinstall the machine, you just have to set:

hammer host update --name cms02.lcg.cscs.ch --build yes

PhEDEx certificate

To update an expired certificate, just edit profile_wlcg_cms_vobox::phedex::proxy_cert in the cms02 Hiera file. The MyProxy user (needed if you have to re-init the MyProxy credential) is set in the script https://gitlab.cern.ch/SITECONF/T2_CH_CSCS/edit/master/PhEDEx/tools/cron/cron_proxy.sh

The current user is cscs_cms02_phedex_jpata_2017 and the certificate is located in /home/phedex/gridcert/x509_new. The certificate is not managed by Puppet because it needs to be updated via the CERN MyProxy service. Puppet automatically pulls the repo every 30 minutes.

UMD3

Make sure the UMD3 Yum repo is set up:

yum install http://repository.egi.eu/sw/production/umd/3/sl6/x86_64/updates/umd-release-3.0.1-1.el6.noarch.rpm

FroNtier

THE INFORMATION FOR TESTING IS PARTLY OUTDATED, THEREFORE I ADDED A TODO

Installation

create
export FRONTIER_USER=dbfrontier
export FRONTIER_GROUP=dbfrontier

Run the installation as described at https://twiki.cern.ch/twiki/bin/view/Frontier/InstallSquid

rpm -Uvh http://frontier.cern.ch/dist/rpms/RPMS/noarch/frontier-release-1.0-1.noarch.rpm
yum install frontier-squid
chkconfig frontier-squid on

Create the folders on the special partition:

mkdir /home/dbfrontier/cache
mkdir /home/dbfrontier/log
chown dbfrontier:dbfrontier /home/dbfrontier/cache/
chown dbfrontier:dbfrontier /home/dbfrontier/log/

Configuration

Edit /etc/squid/customize.sh

Allow SNMP monitoring of squid service

Edit /etc/sysconfig/iptables and add:

-A INPUT -s 128.142.0.0/16 -p udp --dport 3401 -j ACCEPT
-A INPUT -s 188.185.0.0/17 -p udp --dport 3401 -j ACCEPT

then reload the rules:

service iptables reload

Test squid proxy

Log file locations:

- /home/squid/log/access.log

- /home/squid/log/cache.log

Using Frontier URL: http://cmsfrontier.cern.ch:8000/FrontierProd/Frontier
Query: select 1 from dual
Decode results: True
Refresh cache: False
Frontier Request:
http://cmsfrontier.cern.ch:8000/FrontierProd/Frontier/type=frontier_request:1:DEFAULT&encoding=BLOBzip&p1=eNorTs1JTS5RMFRIK8rPVUgpTcwBAD0rBmw_
Query started: 02/29/16 22:32:47 CET
Query ended: 02/29/16 22:32:47 CET
Query time: 0.00365591049194 [seconds]
Query result:
eF5jY2BgYDRkA5JsfqG+Tq5B7GxgEXYAGs0CVA==
Fields: 1 NUMBER
Records: 1
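The Frontier Request URL above carries the SQL query in its p1 parameter. Judging from this log, p1 is the zlib-compressed query encoded as base64 with `=` written as `_`; the additional `+` to `.` and `/` to `-` substitutions used below are an assumption about the Frontier client, since those characters do not appear here. A small sketch for decoding (or building) such a parameter when reading squid access logs:

```python
import base64
import zlib

# Assumed URL-safe substitutions for the p1 parameter; only '=' -> '_' is
# visible in the log above.
_TO_URL = str.maketrans("+/=", ".-_")
_FROM_URL = str.maketrans(".-_", "+/=")


def encode_query(sql: str) -> str:
    """Compress and encode an SQL string the way p1 appears to be built."""
    packed = zlib.compress(sql.encode("ascii"), 9)
    return base64.b64encode(packed).decode("ascii").translate(_TO_URL)


def decode_query(p1: str) -> str:
    """Recover the SQL text from a p1 value found in a Frontier request URL."""
    packed = base64.b64decode(p1.translate(_FROM_URL))
    return zlib.decompress(packed).decode("ascii")


if __name__ == "__main__":
    print(decode_query("eNorTs1JTS5RMFRIK8rPVUgpTcwBAD0rBmw_"))
```

Decoding the p1 value from the test above yields the query shown on the `Query:` line, which is a quick way to see which SQL a logged request carried.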

Xrootd

Installation

Install Xrootd on SL6 for dCache according to

rpm -Uhv http://repo.grid.iu.edu/osg-el6-release-latest.rpm

Install the packages and copy the host certificate/key:

yum install --disablerepo='*' --enablerepo=osg-contrib,osg-testing cms-xrootd-dcache
yum install xrootd-cmstfc
yum

Mount CVMFS and make sure that this file is available: /cvmfs/cms.cern.ch/SITECONF/local/PhEDEx/storage.xml ; that's the CVMFS version of https://gitlab.cern.ch/SITECONF/T2_CH_CSCS/raw/master/PhEDEx/storage.xml

chkconfig xrootd on
chkconfig cmsd on
service xrootd start
service cmsd start

Configuration

Redirector Setup (from June 2013, dCache 2.10)

Detailed reporting requires a plugin on the dCache side.

Verify that /pnfs is properly mounted both at:

- runtime:

  $ df -h /pnfs
  Filesystem Size Used Avail Use% Mounted on
  storage02.lcg.cscs.ch:/pnfs 1.0E 1.7P 1023P 1% /pnfs

- boot time:

  $ grep pnfs /etc/fstab
  storage02.lcg.cscs.ch:/pnfs /pnfs nfs rw,intr,noac,hard,proto=tcp,nfsvers=3 0 0
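Both checks above can be combined into one script that reads /etc/fstab (boot time) and /proc/mounts (runtime), which share the same whitespace-separated field layout. A minimal sketch; requiring `nfs` with `nfsvers=3` simply mirrors the fstab line shown above and is an assumption, not a dCache requirement:

```python
from typing import List, NamedTuple, Optional


class MountEntry(NamedTuple):
    device: str
    mountpoint: str
    fstype: str
    options: List[str]


def find_entry(table_text: str, mountpoint: str) -> Optional[MountEntry]:
    """Find `mountpoint` in fstab- or /proc/mounts-style text."""
    for line in table_text.splitlines():
        fields = line.split()
        if len(fields) < 4 or fields[0].startswith("#"):
            continue  # skip blank, short and comment lines
        if fields[1] == mountpoint:
            return MountEntry(fields[0], fields[1], fields[2], fields[3].split(","))
    return None


def check_pnfs(fstab_text: str, mounts_text: str, mountpoint: str = "/pnfs") -> List[str]:
    """Return a list of problems; an empty list means both checks passed."""
    problems = []
    boot = find_entry(fstab_text, mountpoint)
    if boot is None:
        problems.append(f"{mountpoint}: no /etc/fstab entry (not mounted at boot)")
    elif boot.fstype != "nfs" or "nfsvers=3" not in boot.options:
        problems.append(f"{mountpoint}: fstab entry differs from the documented nfs/nfsvers=3 setup")
    if find_entry(mounts_text, mountpoint) is None:
        problems.append(f"{mountpoint}: not mounted right now")
    return problems


if __name__ == "__main__":
    with open("/etc/fstab") as fstab, open("/proc/mounts") as mounts:
        for problem in check_pnfs(fstab.read(), mounts.read()):
            print(problem)
```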

Allow Xrootd requests

Edit /etc/sysconfig/iptables and add:

-A INPUT -p tcp -m tcp --dport 1094 -m state --state NEW -j ACCEPT

Test Xrootd file access

From a UI machine, for instance at PSI or on LXPLUS:

xrdcp --debug 2 root://cms01.lcg.cscs.ch//store/mc/SAM/GenericTTbar/GEN-SIM-RECO/CMSSW_5_3_1_START53_V5-v1/0013/CE4D66EB-5AAE-E111-96D6-003048D37524.root /dev/null -f
xrdcp --debug 2 root://cms02.lcg.cscs.ch//store/mc/SAM/GenericTTbar/GEN-SIM-RECO/CMSSW_5_3_1_START53_V5-v1/0013/CE4D66EB-5AAE-E111-96D6-003048D37524.root /dev/null -f
xrdcp --debug 2 root://xrootd-cms.infn.it//store/mc/SAM/GenericTTbar/GEN-SIM-RECO/CMSSW_5_3_1_START53_V5-v1/0013/CE4D66EB-5AAE-E111-96D6-003048D37524.root /dev/null -f

PhEDEx on cms02

Read https://twiki.cern.ch/twiki/bin/view/CMSPublic/PhedexAdminDocsInstallation#PhEDEx_Agent_Installation

yum install zsh perl-ExtUtils-Embed libXmu libXpm tcl tk compat-libstdc++-33 git perl-XML-LibXML
adduser phedex
yum install httpd
mkdir /var/www/html/phedexlog
chown phedex:phedex /var/www/html/phedexlog/
su - phedex
mkdir -p state log sw gridcert config
chmod 700 gridcert
export sw=$PWD/sw
myarch=slc6_amd64_gcc461
wget -O $sw/bootstrap.sh http://cmsrep.cern.ch/cmssw/comp/bootstrap.sh
sh -x $sw/bootstrap.sh setup -path $sw -arch $myarch -repository comp 2>&1|tee $sw/bootstrap_$myarch.log
source $sw/$myarch/external/apt/*/etc/profile.d/init.sh
apt-get update
apt-cache search PHEDEX|grep PHEDEX
version=4.1.3-comp3
apt-get install cms+PHEDEX+$version
unlink PHEDEX
ln -s /home/phedex/sw/$myarch/cms/PHEDEX/$version/ PHEDEX

Reset the environment (zlib, required by git, is missing in slc6_amd64_gcc461):

cd config
# pre gitlab era
# git clone https://dmeister@git.cern.ch/reps/siteconf
git clone https://dconciat@gitlab.cern.ch/SITECONF/T2_CH_CSCS.git
# git clone https://mgila@gitlab.cern.ch/SITECONF/T2_CH_CSCS.git
# git clone https://jpata@gitlab.cern.ch/SITECONF/T2_CH_CSCS.git
# git clone https://dpetrusi@gitlab.cern.ch/SITECONF/T2_CH_CSCS.git
cd ..

Run ./config/siteconf/T2_CH_CSCS/PhEDEx/FixHostnames.sh to fix the hostnames in the PhEDEx config.

Get the helper scripts from SVN:

mkdir svn-sandbox
cd svn-sandbox
svn co https://svn.cscs.ch/LCG/VO-specific/cms/phedex
cd ..
cp -R svn-sandbox/phedex/init.d ./
cd init.d
rm -Rf .svn
ln -s phedex_Prod phedex_Debug
ln -s phedex_Prod phedex_Dev
cd ..

and copy the host certificates:

mkdir .globus
chmod 700 .globus
cp /etc/grid-security/hostcert.pem .globus/usercert.pem
cp /etc/grid-security/hostkey.pem .globus/userkey.pem
chown -R phedex:phedex .globus
chmod 600 .globus/*

and set up the proxy and DB logins:

touch config/DBParam.CSCS
chmod 600 config/DBParam.CSCS
# write secret config to file

Cron scripts/cron_restart.sh

Cron scripts/cron_stats.sh

m%d- M).txt
#source /etc/profile.d/grid-env.sh
source /home/phedex/PHEDEX/etc/profile.d/init.sh
echo -e generated on `date` "\n------------------------" > $SUMMARYFILE
echo "Prod:" >> $SUMMARYFILE
/home/phedex/init.d/phedex_Prod status >> $SUMMARYFILE
echo "Debug:" >> $SUMMARYFILE
/home/phedex/init.d/phedex_Debug status >> $SUMMARYFILE
/home/phedex/PHEDEX/Utilities/InspectPhedexLog -c 300 -es "-12 hours" /home/phedex/log/Prod/download-$HOST /home/phedex/log/Debug/download-$HOST >> $SUMMARYFILE 2>/dev/null

Cron scripts/cron_proxy.sh

Cron scripts/cron_spacemon.sh

Cron scripts/cron_clean.sh

CMS dirs in /pnfs to be regularly cleaned up by a set of crons

Cron final setups

chmod +x scripts/*.sh
run config/siteconf/T2_CH_CSCS/PhEDEx/CreateLogrotConf.pl

crontab -e
05 0 * * * /usr/sbin/logrotate -s /home/phedex/state/logrotate.state /home/phedex/config/logrotate.conf
13 5,17 * * * /home/phedex/scripts/cron_restart.sh
*/15 * * * * /home/phedex/scripts/cron_stats.sh
0 */4 * * * /home/phedex/scripts/cron_proxy.sh
0 7 * * * /lhome/phedex/scripts/cron_spacemon.sh

and for root:

crontab -l
30 2 * * * /lhome/phedex/scripts/cron_clean.sh

X509 Proxy into MyProxy at CERN

Set up the MyProxy service from a UI machine:

voms-proxy-init -voms cms
myproxy-init -s myproxy.cern.ch -l cscs_phedex_cms0X_dm_2014 -x -R "/DC=com/DC=quovadisglobal/DC=grid/DC=switch/DC=hosts/C=CH/ST=Zuerich/L=Zuerich/O=ETH Zuerich/CN=cms0X.lcg.cscs.ch" -c 6400

and copy the initial proxy to gridcert/proxy.cert

PhEDEx DDM stats

PhEDEx locally generated stats

Make an SSH RSA key pair:

[phedex@cms02 ~]$ ssh-keygen -C "To push the cms02 phedex stats into mysql@ganglia:/var/www/html/ganglia/phedex/" -t rsa

Make a phedex cron job pushing:

[phedex@cms02 ~]$ ll /var/www/html/ganglia/phedex/
total 588
-rw-r--r-- 1 phedex phedex 15043 Feb 1 23:45 statistics.DONEm01-HELPM.txt
-rw-r--r-- 1 phedex phedex 17526 Feb 2 23:45 statistics.DONEm02-HELPM.txt
-rw-r--r-- 1 phedex phedex 14815 Feb 3 23:45 statistics.DONEm03-HELPM.txt
...

into mysql@ganglia.lcg.cscs.ch:/var/www/html/ganglia/phedex/

Check that you can browse them: http://ganglia.lcg.cscs.ch/ganglia/phedex/
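The page does not show the push cron job itself. One possible shape for it, sketched under assumptions: the key created by ssh-keygen above is at the default path /home/phedex/.ssh/id_rsa (hypothetical), and plain `scp` in batch mode is used for the copy; the source directory and destination are taken from the listing above:

```python
import glob
import subprocess
from typing import List

# Source and destination as shown in the listing above; KEY is a hypothetical path.
STATS_GLOB = "/var/www/html/ganglia/phedex/statistics.*.txt"
DEST = "mysql@ganglia.lcg.cscs.ch:/var/www/html/ganglia/phedex/"
KEY = "/home/phedex/.ssh/id_rsa"


def build_scp_command(files: List[str], dest: str = DEST, key: str = KEY) -> List[str]:
    """Argument vector for a quiet, non-interactive (batch mode) scp of the stats files."""
    return ["scp", "-q", "-B", "-i", key, *files, dest]


def push_stats() -> int:
    """Copy all statistics files; returns scp's exit code (0 if nothing to push)."""
    files = sorted(glob.glob(STATS_GLOB))
    if not files:
        return 0
    return subprocess.run(build_scp_command(files), check=False).returncode


if __name__ == "__main__":
    raise SystemExit(push_stats())
```

Batch mode (`-B`) makes scp fail instead of prompting for a password, which is what you want from cron if the key is not accepted on the ganglia side.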

MyProxy 9.3 Activation Code Full Version

MyProxy is a lightweight Windows application whose purpose is to help you filter your Internet browsing sessions by blocking unwanted ads and pop-ups.

Aside from its ad-filtering capabilities, the tool promises to boost your web browser speed by using DNS lookups, share your Internet connection with other computers in the LAN, redial multiple lines until a connection is established, and keep track of how much traffic and money you have spent each day and month.

The tool acts as a proxy between you and your ISP, and accelerates your connection by caching images and filtering ads.

When you run the utility for the first time, you are required to follow the steps of a configuration wizard. You can select the language, choose your Internet connection type (dial-up, GPRS, VPN, DSL with login/password, any connection without authentication parameters, or combined connections), as well as set up the proxy parameters (address, port, login and password).

MyProxy sits in your system tray, and a left click on its icon gives you quick access to the utility's main features, namely the dialer, the configuration panel, statistics and history, and detected banners, as well as changing the cache and filter mode.

The tool enables you to create a list of phone numbers that you can easily dial, automatically reconnect after a custom time, as well as set up authentication parameters (login, password and domain).

The statistics panel offers an overview of your total Internet traffic for the current day, week and month (sent, received and total kilobytes, online time, cost).

What's more, you can view history data about users, IP addresses, URLs, size and HTTP, block selected URLs, disable the caching process, as well as limit the log size on disk to a user-defined value. Plus, you can view a list of detected or blocked URLs and block the selected ones.

You can make the application cache text, images or other items, block SWF files, as well as choose between different ad filters, namely filtering by list or enabling the optimal or paranoid mode.

MyProxy enables you to accept or block all cookies, turn on the auto dial/hang-up mode, enable the traffic compressor, preload links, save data (and exclude several file types from this option), specify the cache folder, adjust the rate system (hourly and traffic rates), and enable sound notifications.

All in all, MyProxy comes with a smart suite of tools for helping you speed up your Internet connection, and is suitable for all types of users, regardless of their experience level. On the downside, it has not been updated for a long time, and we experienced bugs while testing it on Windows 8.1 Pro: it froze and became unresponsive several times.

MyProxy Scalability Information


Starting in the MyProxy v0.6.5 release, the myproxy-test command includes a -performance option for performance testing.

In our tests, the myproxy-server gives stable performance until the listen(2) queue (128 entries in Linux) fills up. The myproxy-server accepts each connection, immediately forks a handler, then accepts another connection, so it drains the listen(2) queue efficiently, but if there are enough simultaneous connection attempts, the operating system will start refusing connections until the myproxy-server frees up another entry in the listen(2) queue. The myproxy-server does not crash under heavy load; the operating system simply refuses connections until the myproxy-server is ready to handle another one.
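The accept-then-fork pattern described above can be illustrated with Python's standard socketserver module. This is only an analogy for how such a server behaves, not MyProxy code: the echo handler is hypothetical, and the backlog of 128 mirrors the Linux listen(2) queue size quoted above (port 7512 is the MyProxy port seen in the transcripts earlier on this page):

```python
import socketserver


class EchoHandler(socketserver.BaseRequestHandler):
    """Runs in a forked child: read up to 1024 bytes, echo them back, exit."""

    def handle(self) -> None:
        data = self.request.recv(1024)
        self.request.sendall(data)


class ForkingServer(socketserver.ForkingTCPServer):
    # Backlog passed to listen(2); connections beyond it are refused by the OS
    # until the parent accepts and forks off the next handler.
    request_queue_size = 128
    allow_reuse_address = True


def make_server(host: str = "127.0.0.1", port: int = 0) -> ForkingServer:
    """Bind and start listening; port 0 lets the OS pick a free port."""
    return ForkingServer((host, port), EchoHandler)


if __name__ == "__main__":
    with make_server(port=7512) as srv:
        srv.serve_forever()  # parent only accepts; each connection gets a child
```

The parent's loop does almost no work per connection, which is why the queue drains quickly; the cost lands in the children, as the next paragraph's memory figures show.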

The primary myproxy-server process runs in under 10MB RAM, with approximately 600KB RAM needed for each child process, so the myproxy-server can comfortably serve 128 simultaneous connections using under 100MB RAM.
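The memory estimate above is simple arithmetic: one parent process plus one forked child per open connection. A quick check with the quoted (approximate) figures:

```python
# Approximate figures quoted above.
PARENT_MB = 10.0   # primary myproxy-server process
CHILD_MB = 0.6     # ~600 KB per forked child
LISTEN_BACKLOG = 128


def estimated_ram_mb(connections: int) -> float:
    """Parent footprint plus one child per simultaneous connection."""
    return PARENT_MB + connections * CHILD_MB


if __name__ == "__main__":
    print(f"{estimated_ram_mb(LISTEN_BACKLOG):.1f} MB for {LISTEN_BACKLOG} connections")
```

With 128 connections this gives about 87 MB (10 + 128 x 0.6), consistent with the "under 100MB" statement.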

As an example, running both server and clients on a 3 GHz Pentium 4, we get stable performance of:

- 6 myproxy-inits per second
  - one keygen on the client side, one keygen on the server side
- 10 myproxy-get-delegations per second
  - one keygen on the client side
- 17 myproxy-change-pass-phrases per second
  - key decrypt/encrypt on the server side
- 22 myproxy-infos per second
  - the simplest myproxy command

For example, with 100 simultaneous clients, doing 20 operations each, we get:

- 2000 myproxy-inits in 334 seconds.

- 2000 myproxy-get-delegations in 198 seconds.

- 2000 myproxy-change-pass-phrases in 119 seconds.

- 2000 myproxy-infos in 91 seconds.
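The two sets of numbers are consistent: 100 clients doing 20 operations each gives 2000 operations, and dividing by the wall-clock times reproduces the per-second rates listed earlier. A short check:

```python
# (operation, total operations, wall-clock seconds) from the 100-client x 20-op run.
RUNS = [
    ("myproxy-init", 2000, 334),
    ("myproxy-get-delegation", 2000, 198),
    ("myproxy-change-pass-phrase", 2000, 119),
    ("myproxy-info", 2000, 91),
]


def rates(runs):
    """Operations per second for each (name, total, seconds) entry."""
    return {name: total / seconds for name, total, seconds in runs}


if __name__ == "__main__":
    for name, rate in rates(RUNS).items():
        print(f"{name:28s} {rate:5.1f} ops/s")
```

Rounded, the rates come out at 6, 10, 17 and 22 operations per second.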

In our experience, the CPU is the bottleneck doing key generations and key decryption/encryption. Note that for the numbers above, we're running both clients and server on the same machine; server throughput would be better if the clients were running elsewhere. Note also that for the most common operation, myproxy-get-delegation, the CPU-intensive key generation happens on the client-side, so server-side scalability for that operation is much better than reported above.

Also, in our tests, the MyProxy repository scales well to over 100,000 credentials. Disk space required per credential is typically under 10KB, though credential sizes can vary depending on key sizes and certificate extensions. 1GB available disk space is more than enough for typical myproxy-server installations.

We'll update this page with more information as we perform more tests. If you're having scalability problems, please report them.

Last modified 08/20/08.

©2000-2019 Board of Trustees of the University of Illinois.

What’s New in the MyProxy keygen?

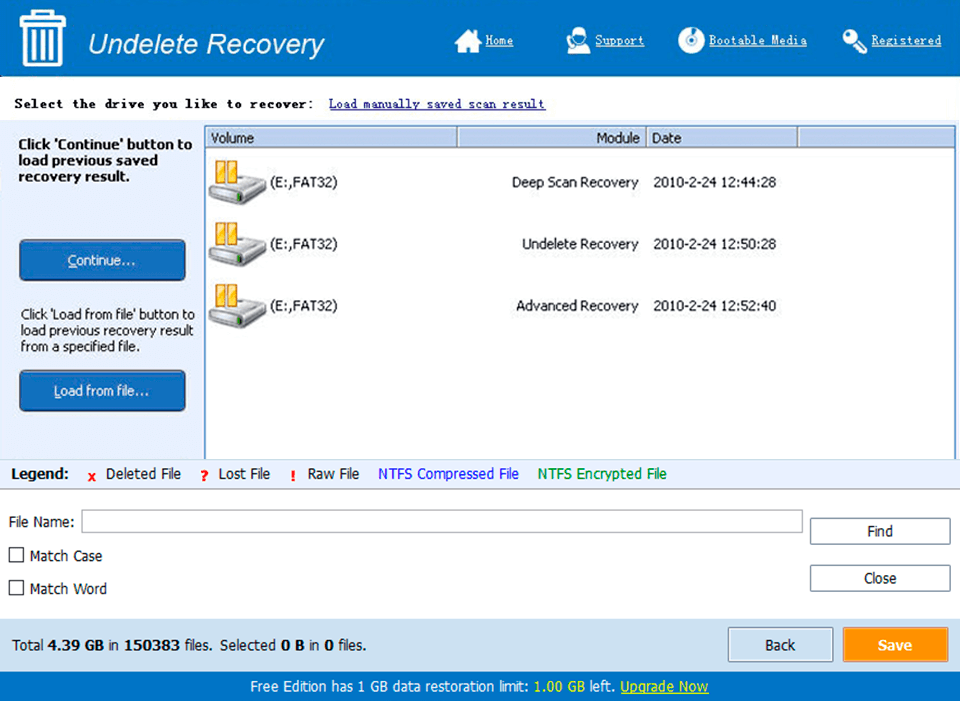

Screen Shot

System Requirements for MyProxy keygen

- First, download the MyProxy keygen

-

You can download its setup from given links: